Research

Camera-Tracking Sunphotometer (More information coming soon)

ESCAPE (More information coming soon)

Obstacle Avoidance (More information coming soon)

Multi-UAV (More information coming soon)

Robotic Exoskeleton for the Stabilization of Tremors (More information coming soon)

Autonomous Surface Vessel

The autonomous surface vessel, or Autoboat, is a research platform under development for exploring control algorithms. The work is also part of a collaboration with the Monterey Bay Aquarium Research Institute to develop a long-term platform for oceanographic studies. The vessel is 15' long, drafts only 3', carries over 1 kW of solar charging capacity, has a top speed of 5 knots, and includes a long-range wireless transceiver. Project details are available in the GitHub repository and on the lab's own wiki.

Overbot (Retired)

The Overbot was a contender in the 2005 DARPA Grand Challenge. It reached the qualification event but failed to complete the course. It now serves as a research platform for path planning and autonomous vehicle research here at UCSC.

Laser Cane

The most common mobility device for the blind is still the traditional long cane. This economical, simple, and reliable tool lets the user extend touch and "preview" the lower portion of the foreground while walking. However, not all visually impaired individuals are able to use the long cane. Furthermore, a rigid cane is an "invasive" tool, often ill-suited to social gatherings, public transportation, or congested areas where it may trip pedestrians.

To address such problems, Prof. Manduchi's engineering group has developed a prototype mobility tool that adds a high-tech twist to the "long cane paradigm." Instead of using a physical cane, the user scans the scene with a laser-based range-sensing device. As the user moves the hand-held laser about, an onboard processor analyzes and integrates the spatial information into a "mental image" of the scene, which is delivered to the user through a tactile interface.

In collaboration with Prof. Manduchi, we are developing a dual-use prototype that can act both as a device for the visually impaired and as a sensor for mobile robotics. The basic premise is to project a laser fan into the environment, with the stereo vision correspondence problem solved explicitly through initial calibration, and to use the reflected return to determine the points and planes of obstacles.
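
The laser-fan premise can be illustrated with a minimal sketch. It assumes a pinhole camera and a laser plane whose equation in the camera frame is already known from calibration, which is what removes the correspondence search. The function names, intrinsics, and plane parameters below are hypothetical and are not taken from the prototype.

```python
import math

def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a unit ray in the camera frame (pinhole model)."""
    x, y, z = (u - cx) / fx, (v - cy) / fy, 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)

def intersect_laser_plane(ray, normal, offset):
    """Intersect a ray through the camera origin with the plane normal . X = offset."""
    denom = sum(n * r for n, r in zip(normal, ray))
    if abs(denom) < 1e-9:
        raise ValueError("ray is parallel to the laser plane")
    t = offset / denom  # distance along the unit ray to the plane
    return tuple(t * r for r in ray)

# Hypothetical calibration values (assumptions for illustration only).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0
PLANE_NORMAL = (0.0, 1.0, 0.2)  # laser plane: y + 0.2 z = 0.1 (metres)
PLANE_OFFSET = 0.1

# A pixel where the laser stripe was detected maps directly to a 3-D point.
ray = pixel_to_ray(350.0, 260.0, FX, FY, CX, CY)
point = intersect_laser_plane(ray, PLANE_NORMAL, PLANE_OFFSET)
print(point)
```

Each lit pixel yields one 3-D point this way; fitting planes to groups of such points would be a separate step that this sketch does not cover.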

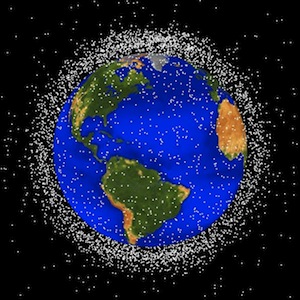

Space Traffic Management

While debris in low Earth orbit eventually de-orbits due to atmospheric drag, anything in higher orbits will take many decades or centuries to reenter the atmosphere. Proposals for any kind of orbital "sweeper" remain too costly and far-fetched to be effective, and there is currently neither clear legal liability nor an established venue in which to seek redress. The problem is here, will not go away on its own, and will continue to worsen without further intervention. Near-Earth space is on its way to becoming another example of the "tragedy of the commons."

Our efforts focus on improving orbit position and velocity estimates derived from public data, in order to reduce the uncertainty in conjunction analysis. This work is funded by the NASA University Affiliated Research Center (UARC).
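
To show why position and velocity uncertainty matters for conjunction analysis, here is a minimal sketch of a closest-approach calculation. It assumes straight-line relative motion during the encounter, a common short-encounter simplification and not necessarily the method used in this project. The function name and the numbers are hypothetical.

```python
import math

def closest_approach(rel_pos, rel_vel):
    """Return (time, miss_distance) of closest approach for linear relative motion.

    rel_pos and rel_vel are the position and velocity of one object relative
    to the other at time zero. A negative time means the closest approach
    has already happened.
    """
    speed_sq = sum(v * v for v in rel_vel)
    if speed_sq == 0.0:
        # No relative motion: the separation never changes.
        return 0.0, math.sqrt(sum(r * r for r in rel_pos))
    t_ca = -sum(r * v for r, v in zip(rel_pos, rel_vel)) / speed_sq
    miss = math.sqrt(sum((r + v * t_ca) ** 2 for r, v in zip(rel_pos, rel_vel)))
    return t_ca, miss

# Hypothetical encounter (km and km/s), once with a nominal velocity estimate
# and once with a small cross-track velocity error.
nominal = closest_approach((100.0, 1.0, 0.0), (-10.0, 0.0, 0.0))
perturbed = closest_approach((100.0, 1.0, 0.0), (-10.0, 0.05, 0.0))
print(nominal, perturbed)
```

Comparing the two results shows how a small error in the velocity estimate changes the predicted miss distance, which is the uncertainty that better estimation aims to reduce.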

SLUGS Autopilot

The SLUGS autopilot is the result of a collaboration between the ASL and the Naval Postgraduate School in Monterey, CA. Its primary design considerations were easy reprogrammability, to facilitate testing of new control algorithms, and low cost, to be available to even the least-funded educational institutions. It also had to be powerful enough to handle the various actuators and sensors required for full control of an aircraft.

The final hardware comprises two Microchip digital signal controllers (DSCs), one for control and the other for sensor fusion. Using two DSCs lets most processes run at 100 Hz, with some lower-priority items running at 10 Hz. The autopilot is written almost entirely in Simulink, with the hardware drivers and glue code written in C. With Simulink, users can easily change the control algorithms that run onboard. Algorithms can be tested before they fly, either in pure Simulink simulation or in hardware-in-the-loop simulation, which uses both the ground station and the actual autopilot hardware.
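
The 100 Hz and 10 Hz split can be pictured as a simple rate divider. The sketch below is an illustration in Python only; the actual autopilot is built from Simulink with C drivers, and the task names and the `run_ticks` helper here are hypothetical.

```python
BASE_RATE_HZ = 100  # rate of the main loop, from the description above
SLOW_RATE_HZ = 10   # rate of lower-priority items
SLOW_DIVIDER = BASE_RATE_HZ // SLOW_RATE_HZ  # slow tasks run every 10th tick

def run_ticks(n_ticks, fast_task, slow_task):
    """Call fast_task on every tick and slow_task on every SLOW_DIVIDER-th tick."""
    for tick in range(n_ticks):
        fast_task(tick)
        if tick % SLOW_DIVIDER == 0:
            slow_task(tick)

# Count how often each (hypothetical) task runs during one simulated second.
counts = {"fast": 0, "slow": 0}

def control_step(tick):
    counts["fast"] += 1

def housekeeping_step(tick):
    counts["slow"] += 1

run_ticks(BASE_RATE_HZ, control_step, housekeeping_step)
print(counts)
```

On real hardware the tick would come from a timer interrupt instead of a `for` loop; the divider logic is the part this sketch is meant to show.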

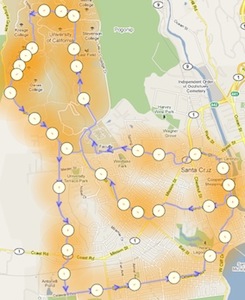

Personal Rapid Transit (PRT)

Personal Rapid Transit (PRT) is an electric-powered, autonomous transportation system. PRT emulates a car's individual, modular architecture and avoids the pitfalls of bus and rail mass transit without requiring fuel cells or advanced battery technology. In this design, lightweight two- to four-passenger vehicles run along an elevated guideway, which can be built over existing roads. Traffic is managed by a control system that functions much like packet routing over the Internet (with non-destructive arbitration).

We intend to build two simulators, one to study the effects of PRT on traffic congestion and the other to study its effects on energy use (at UC Berkeley). The first simulator will model traffic patterns to test network design and estimate congestion. It will also be used to validate control algorithms generated both within this project and by external PRT developers, and it will allow comparison between conventional mass transit systems, such as buses or subways, and a PRT-based mass transit system. Similarly, the energy simulator will be used to estimate the impact of PRT on energy requirements and CO2 emissions.

Local governments can use these tools to evaluate potential PRT designs while helping to benchmark the goals mandated by the AB32 measure. With these two simulators demonstrating system viability, the hope is to establish a full center and bring PRT to California as the next generation of transportation system.
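
One way to picture non-destructive arbitration at a guideway merge is sketched below. When two vehicles request the same merge slot, one keeps it and the other is deferred to the next free slot, so no request is dropped. The priority rule (lower vehicle ID wins), the slot model, and the function name are assumptions made for illustration, not the project's control algorithm.

```python
def assign_merge_slots(requests):
    """Grant each vehicle a merge slot without dropping any request.

    requests is a list of (vehicle_id, requested_slot) pairs. Conflicts are
    resolved in favour of the lower vehicle_id (an assumed priority rule);
    the losing vehicle is deferred to the next free slot.
    """
    granted = {}
    taken = set()
    # Process earlier slots first; within a slot, lower IDs go first.
    for vehicle_id, slot in sorted(requests, key=lambda r: (r[1], r[0])):
        while slot in taken:
            slot += 1  # defer instead of rejecting
        taken.add(slot)
        granted[vehicle_id] = slot
    return granted

# Vehicles 7 and 2 both ask for slot 3; vehicle 5 asks for slot 4.
print(assign_merge_slots([(7, 3), (2, 3), (5, 4)]))
```

A traffic simulator could use a rule like this to measure how often vehicles are deferred as demand on a merge increases.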